AI in hiring can unintentionally replicate biases from historical data, impacting fairness and diversity. To combat this, AI tools analyze data patterns, test for fairness, and track accuracy. Key areas where bias often occurs include resume screening, job descriptions, and AI-powered interviews. Challenges include overlapping bias types, evolving fairness standards, and balancing speed with accuracy.

Key Takeaways:

- Methods to Detect Bias: Data pattern analysis, fairness testing, and accuracy tracking.

- Bias-Prone Areas: Resume reviews, job descriptions, and AI interviews.

- Solutions: Use diverse training data, incorporate human oversight, and conduct regular system reviews.

By combining automation with human input and ongoing monitoring, companies can create more equitable hiring processes.

How AI Detects Bias in Hiring

Analyzing Data Patterns

AI systems rely on statistical techniques to identify bias in hiring data. By examining historical hiring decisions, these systems can spot patterns that may unfairly impact groups based on gender, age, ethnicity, or other protected characteristics. Methods like distribution analysis track how different demographic groups move through hiring stages, while correlation testing looks for links between candidate traits and hiring results. Another method, variance comparison, checks if decisions are consistent across groups. For instance, if candidates with similar qualifications receive different scores, the system flags the issue as a potential bias hotspot. These insights provide a solid base for further testing.
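To make distribution analysis concrete, here is a minimal Python sketch. It assumes a hypothetical dataset in which each record holds a candidate's demographic group and the furthest hiring stage they reached. The sketch computes stage-to-stage pass rates per group, then divides each group's rate by the best group's rate. The 0.8 cutoff mirrors the "four-fifths rule" often used as a rough screening heuristic for adverse impact in US hiring. Treat a flag as a prompt to investigate, not a legal conclusion. The stage names, records, and function names are illustrative only.

```python
from collections import defaultdict

# Hypothetical, ordered hiring stages; reaching a stage implies passing every earlier one.
STAGES = ["applied", "screened", "interviewed", "offered"]


def stage_pass_rates(records):
    """Return, per group, the share of candidates at each stage who advanced to the next one."""
    reached = defaultdict(lambda: [0] * len(STAGES))
    for group, furthest_stage in records:
        for i in range(STAGES.index(furthest_stage) + 1):
            reached[group][i] += 1
    return {
        group: {
            f"{STAGES[i]}->{STAGES[i + 1]}": counts[i + 1] / counts[i] if counts[i] else None
            for i in range(len(STAGES) - 1)
        }
        for group, counts in reached.items()
    }


def impact_ratios(rates, transition):
    """Divide each group's pass rate for one transition by the highest group's rate."""
    values = {g: r[transition] for g, r in rates.items() if r[transition] is not None}
    best = max(values.values(), default=0)
    if best == 0:
        return {g: None for g in values}
    return {g: v / best for g, v in values.items()}


# Illustrative records: (demographic group, furthest stage reached).
records = [
    ("A", "offered"), ("A", "interviewed"), ("A", "screened"), ("A", "applied"),
    ("B", "interviewed"), ("B", "screened"), ("B", "applied"), ("B", "applied"),
]
rates = stage_pass_rates(records)
ratios = impact_ratios(rates, "applied->screened")
flagged = sorted(g for g, r in ratios.items() if r is not None and r < 0.8)
print(ratios, flagged)
```

In this toy data, group B moves from application to screening at two-thirds of group A's rate, so it is flagged. With real data, small groups make these ratios noisy, so report the underlying counts alongside them.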

Testing Algorithms for Fairness

To evaluate fairness, AI systems are tested against specific metrics. Equal opportunity means qualified candidates in each group are selected at similar rates. Demographic parity means each group is selected at a similar overall rate. Individual fairness means similar candidates receive similar outcomes. Techniques like cross-validation with diverse datasets, adversarial testing, and comparisons with human decision-making help uncover systematic biases. Together, these checks show whether the algorithm behaves consistently across different scenarios. Once the fairness checks pass, additional accuracy metrics help fine-tune the system.
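The three fairness metrics can each be computed in a few lines. The sketch below is plain Python written for readability, not a reference implementation. It defines the metrics as follows:

- Demographic parity: the largest gap in selection rate between groups.
- Equal opportunity: the largest gap in true positive rate, meaning the share of qualified candidates who are selected.
- Individual fairness: a "similar candidates, similar outcomes" check that flags candidate pairs whose score gap exceeds a multiple of their feature distance.

Several details are assumptions. The labels are illustrative. The check uses Euclidean distance over features pre-scaled to [0, 1], and the `lipschitz` constant is arbitrary. A real audit would need a similarity measure suited to the role.

```python
import math
from itertools import combinations


def _mean(values):
    return sum(values) / len(values)


def _by_group(values, groups):
    buckets = {}
    for v, g in zip(values, groups):
        buckets.setdefault(g, []).append(v)
    return buckets


def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate (share predicted positive) between any two groups."""
    rates = [_mean(v) for v in _by_group(y_pred, groups).values()]
    return max(rates) - min(rates)


def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap in true positive rate: among qualified candidates (y_true == 1), the share selected."""
    qualified = [(p, g) for t, p, g in zip(y_true, y_pred, groups) if t == 1]
    preds, grps = zip(*qualified)
    tprs = [_mean(v) for v in _by_group(preds, grps).values()]
    return max(tprs) - min(tprs)


def individual_fairness_violations(features, scores, lipschitz=1.0):
    """Index pairs whose score gap exceeds lipschitz * Euclidean distance between their features."""
    return [
        (i, j)
        for i, j in combinations(range(len(scores)), 2)
        if abs(scores[i] - scores[j]) > lipschitz * math.dist(features[i], features[j])
    ]


# Illustrative data: y_true = 1 means the candidate was qualified; y_pred = 1 means selected.
y_true = [1, 1, 0, 1, 1, 0, 1, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
dpd = demographic_parity_difference(y_pred, groups)
eod = equal_opportunity_difference(y_true, y_pred, groups)

features = [(0.9, 0.8), (0.9, 0.8), (0.2, 0.1)]  # first two candidates are identical
scores = [0.85, 0.40, 0.10]
violations = individual_fairness_violations(features, scores)
print(dpd, eod, violations)
```

In this toy data, group A is selected at 0.5 and group B at 0.25, a parity gap of 0.25. Qualified candidates in A are selected at 2/3, compared with 1/2 in B. The two identical candidates with very different scores are exactly the kind of case an individual fairness check is meant to surface.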

Tracking AI Accuracy

Metrics like the false positive rate, false negative rate, selection rate, and calibration (how closely predicted scores match actual outcomes) are crucial for evaluating system accuracy, especially when they are compared across demographic groups. Regular monitoring allows organizations to detect emerging bias patterns quickly and make adjustments to keep the system fair and effective.
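Tracking these metrics per group is mostly bookkeeping. The following sketch assumes hypothetical inputs for one monitoring period: true outcomes, binary decisions, model scores in [0, 1], and group labels. It computes each metric per group and flags any metric whose spread across groups exceeds a threshold. The `max_gap` of 0.1 is an arbitrary placeholder. The calibration measure is deliberately coarse: it compares the average predicted score with the observed positive rate rather than binning scores.

```python
from collections import defaultdict


def group_metrics(y_true, y_pred, y_score, groups):
    """Per-group false positive rate, false negative rate, selection rate, and calibration gap."""
    rows_by_group = defaultdict(list)
    for t, p, s, g in zip(y_true, y_pred, y_score, groups):
        rows_by_group[g].append((t, p, s))
    metrics = {}
    for g, rows in rows_by_group.items():
        neg_preds = [p for t, p, _ in rows if t == 0]  # decisions for unqualified candidates
        pos_preds = [p for t, p, _ in rows if t == 1]  # decisions for qualified candidates
        n = len(rows)
        metrics[g] = {
            "fpr": sum(neg_preds) / len(neg_preds) if neg_preds else None,
            "fnr": (len(pos_preds) - sum(pos_preds)) / len(pos_preds) if pos_preds else None,
            "selection_rate": sum(p for _, p, _ in rows) / n,
            # Mean predicted score minus observed positive rate; 0 means calibrated on average.
            "calibration_gap": sum(s for _, _, s in rows) / n - sum(t for t, _, _ in rows) / n,
        }
    return metrics


def spread_alerts(metrics, max_gap=0.1):
    """Names of metrics whose max-minus-min across groups exceeds max_gap (a placeholder threshold)."""
    flagged = []
    for name in ("fpr", "fnr", "selection_rate", "calibration_gap"):
        values = [m[name] for m in metrics.values() if m[name] is not None]
        if len(values) >= 2 and max(values) - min(values) > max_gap:
            flagged.append(name)
    return flagged


# Illustrative data for one monitoring period.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
y_score = [0.9, 0.2, 0.8, 0.6, 0.4, 0.1, 0.7, 0.3]
groups = ["A"] * 4 + ["B"] * 4
metrics = group_metrics(y_true, y_pred, y_score, groups)
print(metrics)
print(spread_alerts(metrics))
```

Running the same function on each month's decisions, and keeping the results, gives the trend line that regular monitoring depends on.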

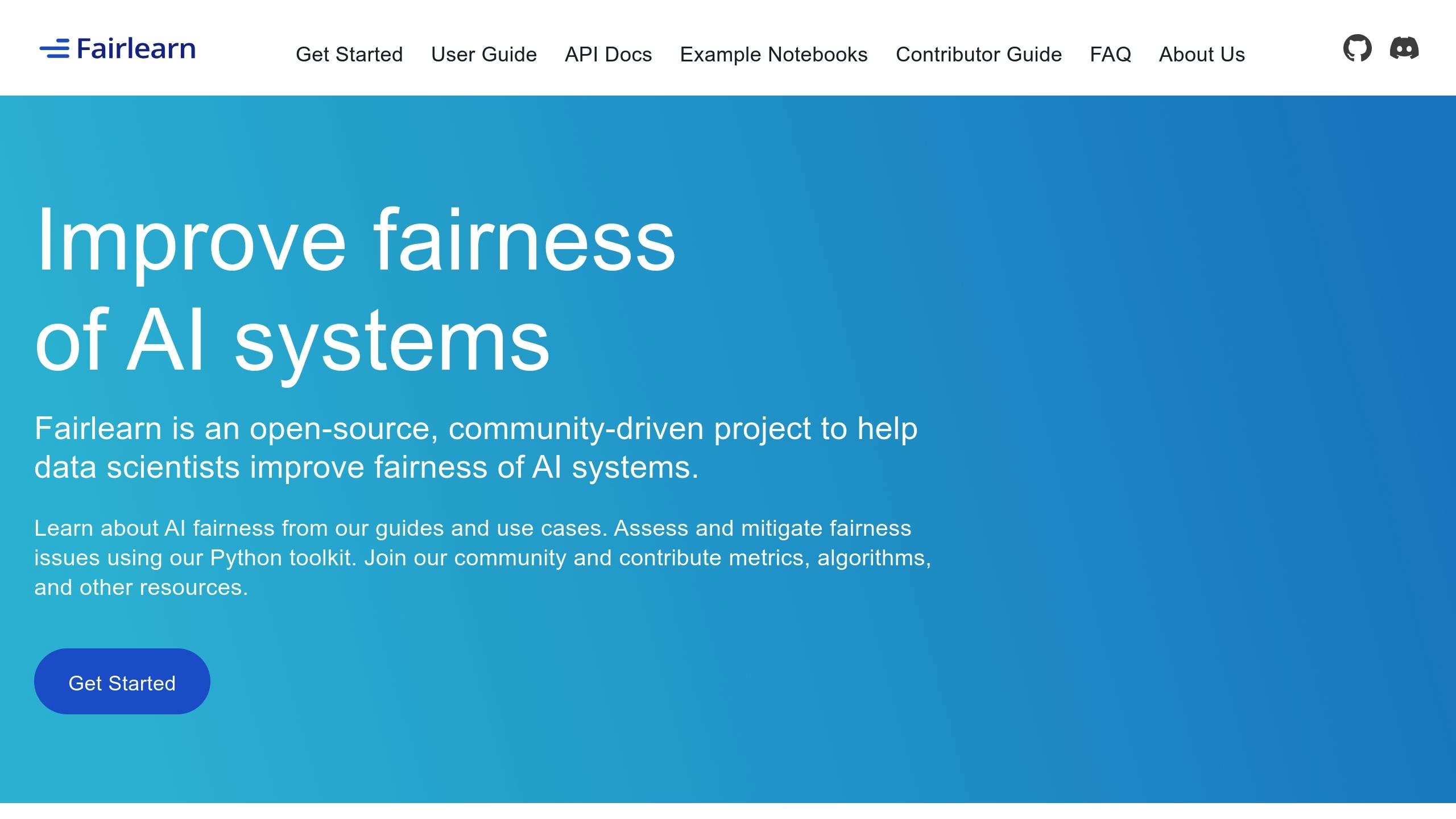

Bias detection tools for AI Models - Fairlearn and AIF360 - Live ...

Where Bias Appears Most Often

Understanding how bias creeps into hiring processes is just as important as knowing how to detect it. Here are some areas where bias tends to show up.

Resume Review Systems

Automated resume screening tools often rely on historical data, which can unintentionally favor candidates from specific educational backgrounds, like those from elite institutions. This creates hurdles for equally skilled candidates who might not fit that mold. Additionally, keyword matching systems can overlook relevant experience if it's described in unconventional ways, leading to unfair rankings.
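The keyword problem is easy to reproduce. In this minimal sketch, an exact-match scorer gives only partial credit to a resume that describes project management in different words. A version that first maps known synonyms onto canonical terms scores the same resume fully. The required terms and the synonym table are hypothetical. A production system would need a much larger, carefully reviewed vocabulary, and synonym tables can carry blind spots of their own.

```python
# Hypothetical requirements and synonym table, for illustration only.
REQUIRED = ["project management", "python"]
SYNONYMS = {
    "led cross-functional initiatives": "project management",
    "program coordination": "project management",
}


def exact_keyword_score(resume_text, required=REQUIRED):
    """Share of required keywords that appear verbatim (case-insensitive) in the resume."""
    text = resume_text.lower()
    return sum(kw in text for kw in required) / len(required)


def normalized_keyword_score(resume_text, required=REQUIRED, synonyms=SYNONYMS):
    """Same score, after rewriting known synonym phrases to their canonical keyword."""
    text = resume_text.lower()
    for phrase, canonical in synonyms.items():
        text = text.replace(phrase, canonical)
    return exact_keyword_score(text, required)


resume = "Led cross-functional initiatives for a data team; built internal tools in Python."
print(exact_keyword_score(resume), normalized_keyword_score(resume))
```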

Job Post Text Analysis

The way job descriptions are written can heavily influence who decides to apply. Certain words and phrases can unintentionally discourage specific groups of people. For example:

- Age bias: Words like "recent graduate" or "energetic" might signal a preference for younger applicants.

- Cultural bias: Language that assumes native-level fluency or uses idioms unfamiliar to non-native speakers can exclude qualified candidates.

- Socioeconomic bias: Requiring degrees or qualifications that aren't necessary for the role can filter out capable individuals from less privileged backgrounds.
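A simple first pass at job post analysis is a phrase scanner that flags wording for a human to review. The sketch below assumes a hypothetical, deliberately tiny lexicon built from the examples above. Real tools rely on much larger, validated word lists. A flag is only a prompt to reconsider the wording, for example whether a degree is truly needed for the role. It is not proof of bias.

```python
import re

# Hypothetical lexicon built only from this article's examples; not a validated word list.
FLAGGED_PHRASES = {
    "age": ["recent graduate", "energetic"],
    "cultural": ["native speaker", "native-level"],
    "socioeconomic": ["degree required"],
}


def scan_job_post(text, lexicon=FLAGGED_PHRASES):
    """Return {category: [phrases found]} using case-insensitive, whole-phrase matching."""
    hits = {}
    for category, phrases in lexicon.items():
        found = [
            p for p in phrases
            if re.search(r"\b" + re.escape(p) + r"\b", text, flags=re.IGNORECASE)
        ]
        if found:
            hits[category] = found
    return hits


post = "We want an energetic recent graduate. Bachelor's degree required; native speaker preferred."
print(scan_job_post(post))
```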

AI Interview Evaluation

AI-powered interview tools aren't immune to bias either. Speech recognition software may struggle with accents, leading to lower scores for candidates who speak differently. Similarly, video-based evaluations can be affected by external factors like poor lighting or background settings, which might unfairly disadvantage candidates from less affluent environments. Even facial analysis tools can apply narrow cultural standards, failing to recognize diverse communication styles or expressions.
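One way to check the speech recognition concern is to compare word error rate (WER) across accent groups. This requires interview recordings with human-verified reference transcripts. WER counts the word substitutions, deletions, and insertions needed to turn the system's transcript into the reference, then divides by the number of reference words. The sketch below assumes such labeled samples exist. The group labels and sentences are illustrative. As a simplification, it averages per-sample WER rather than pooling word counts across samples.

```python
def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / reference length, via word-level edit distance."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    prev = list(range(len(hyp) + 1))  # distances from an empty reference prefix
    for i, r in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            cur[j] = min(
                prev[j] + 1,             # deletion: reference word missing from output
                cur[j - 1] + 1,          # insertion: extra word in output
                prev[j - 1] + (r != h),  # substitution, or a match when the words are equal
            )
        prev = cur
    return prev[-1] / len(ref)


def mean_wer_by_group(samples):
    """samples: iterable of (group, reference transcript, ASR output). Returns mean WER per group."""
    per_group = {}
    for group, ref, hyp in samples:
        per_group.setdefault(group, []).append(word_error_rate(ref, hyp))
    return {g: sum(v) / len(v) for g, v in per_group.items()}


samples = [
    ("accent_a", "I led the data team", "I led the data team"),
    ("accent_b", "I led the data team", "I let the data beam"),
]
print(mean_wer_by_group(samples))
```

A persistent WER gap between groups suggests that interview scores built on those transcripts deserve a closer look.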

Main Bias Detection Problems

Now that we've identified where bias often emerges, let's break down the main challenges in detecting it within AI hiring systems.

Overlapping Bias Types

Biases related to gender, age, language, or socioeconomic status often don't exist in isolation. They overlap and interact, making it hard to pinpoint and address each one separately. This overlap can amplify their impact, leading to skewed hiring outcomes.
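Because biases overlap, audits often look at combinations of attributes as well as each attribute on its own. In this constructed example, selection rates look identical by gender and by age band. Yet two intersectional subgroups are never selected. The attribute names and records are hypothetical, chosen to make the effect obvious.

```python
from collections import defaultdict


def selection_rates(candidates, keys):
    """Selection rate for every observed combination of the given attribute keys."""
    counts = defaultdict(lambda: [0, 0])  # [selected, total]
    for c in candidates:
        combo = tuple(c[k] for k in keys)
        counts[combo][0] += c["selected"]
        counts[combo][1] += 1
    return {combo: sel / total for combo, (sel, total) in counts.items()}


# Constructed example: balanced marginals that hide opposite subgroup outcomes.
candidates = (
    [{"gender": "M", "age_band": "under_40", "selected": 1}] * 2
    + [{"gender": "M", "age_band": "40_plus", "selected": 0}] * 2
    + [{"gender": "F", "age_band": "under_40", "selected": 0}] * 2
    + [{"gender": "F", "age_band": "40_plus", "selected": 1}] * 2
)
by_gender = selection_rates(candidates, ["gender"])
by_age = selection_rates(candidates, ["age_band"])
by_both = selection_rates(candidates, ["gender", "age_band"])
print(by_gender, by_age, by_both, sep="\n")
```

Intersectional subgroups are smaller, so their rates are noisier. Report counts alongside rates before acting on a gap.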

Evolving Standards

Fairness isn't a static concept. Legal requirements and societal expectations around fairness are constantly shifting. This means AI systems need regular updates to stay aligned with these changes, which adds complexity to maintaining fair hiring practices.

Balancing Speed and Accuracy

Companies often face a tough choice: conduct detailed bias checks that slow down the hiring process, or prioritize speed, which risks missing subtle biases. Striking the right balance is a constant challenge.

These issues highlight the ongoing need for regular assessments and updates in AI hiring systems to keep up with societal changes and technological advancements.

Steps to Reduce AI Hiring Bias

After recognizing the challenges in detecting bias, let’s dive into actionable steps organizations can take to make their AI hiring systems fairer.

Diverse Training Data

To build fair hiring tools, start with a broad and well-rounded dataset. This means ensuring the data reflects a variety of demographics, career paths, and experiences. When working with historical hiring data, pay attention to:

- Education levels

- Career trajectories

- Industry backgrounds

- Geographic diversity

Using data that represents a wide range of perspectives helps create a solid foundation for fairer AI systems.
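A representation check can start by comparing category shares in the training data with a reference distribution, such as the relevant applicant pool or labor market. In the sketch below, the `region` attribute, the reference shares, and the 5-point tolerance are all hypothetical. Choosing the right reference is itself a judgment call that deserves review.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Return {category: observed share - reference share} for categories off by more than tolerance.

    Categories that appear in the data but not in reference_shares are not checked.
    """
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    gaps = {}
    for category, expected in reference_shares.items():
        observed = counts.get(category, 0) / total
        if abs(observed - expected) > tolerance:
            gaps[category] = observed - expected
    return gaps


# Hypothetical training records and reference shares (e.g. from the relevant applicant pool).
training = [{"region": "urban"}] * 8 + [{"region": "rural"}] * 2
reference = {"urban": 0.6, "rural": 0.4}
print(representation_gaps(training, "region", reference))
```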

Incorporating Human Oversight

AI alone can't catch everything - human reviewers are essential. Their role includes:

- Reviewing cases flagged as uncertain or complex

- Evaluating qualitative data that algorithms might overlook

- Providing feedback to refine the system over time

Striking the right balance between automation and human input is crucial. Regular calibration sessions, in which reviewers compare their own judgments with the AI system's outputs, can help pinpoint where bias may arise and ensure consistent standards are applied.
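Routing uncertain or complex cases to people can be expressed as a simple triage rule. In this sketch, cases go to a human review queue if their score falls inside a middle band. Any case marked as complex, such as a nonstandard career path, also goes to review regardless of score. The band limits, the `complex` flag, and the queue names are hypothetical.

```python
def route(candidates, low=0.4, high=0.7):
    """Send uncertain (low <= score < high) or complex cases to people; thresholds are hypothetical."""
    queues = {"advance": [], "human_review": [], "not_advanced": []}
    for c in candidates:
        if c["complex"] or low <= c["score"] < high:
            queues["human_review"].append(c["id"])
        elif c["score"] >= high:
            queues["advance"].append(c["id"])
        else:
            queues["not_advanced"].append(c["id"])
    return queues


candidates = [
    {"id": "c1", "score": 0.90, "complex": False},
    {"id": "c2", "score": 0.55, "complex": False},
    {"id": "c3", "score": 0.20, "complex": True},  # e.g. nonstandard career path
    {"id": "c4", "score": 0.10, "complex": False},
]
print(route(candidates))
```

Reviewers can also audit a random sample of the confident "advance" and "not advanced" decisions. That sample gives calibration sessions concrete cases for checking whether the model's confident calls hold up.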

Regular System Reviews

Keeping your system fair requires ongoing attention. Here’s how to stay on track:

- Monthly audits: Analyze candidate demographics, success rates, and the effects of any algorithm changes.

- Feedback loops: Gather input from hiring managers and employees to spot issues early.

- Compliance updates: Adjust the system to reflect new laws and fairness guidelines.

Frequent checks and updates ensure your AI hiring tools stay aligned with fairness goals and evolving standards.
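One part of a monthly audit is checking how an algorithm change affected each group. This can be sketched as a before/after comparison of selection rates. The period data, group labels, and 5-point `max_shift` threshold are hypothetical. A flagged shift is a reason to investigate, since it may reflect a change in the applicant pool rather than in the model.

```python
def selection_rate_by_group(decisions):
    """decisions: list of (group, selected) pairs with selected in {0, 1}."""
    totals = {}
    for group, selected in decisions:
        sel, n = totals.get(group, (0, 0))
        totals[group] = (sel + selected, n + 1)
    return {g: sel / n for g, (sel, n) in totals.items()}


def audit_change(before, after, max_shift=0.05):
    """Per-group selection-rate shift between two periods; groups missing from either period are skipped."""
    rb, ra = selection_rate_by_group(before), selection_rate_by_group(after)
    report = {}
    for g in sorted(rb.keys() & ra.keys()):
        shift = ra[g] - rb[g]
        report[g] = {"before": rb[g], "after": ra[g], "shift": shift, "flag": abs(shift) > max_shift}
    return report


# Hypothetical decisions from the month before and the month after a model update.
before = [("A", 1)] * 5 + [("A", 0)] * 5 + [("B", 1)] * 4 + [("B", 0)] * 6
after = [("A", 1)] * 5 + [("A", 0)] * 5 + [("B", 1)] * 2 + [("B", 0)] * 8
for group, row in audit_change(before, after).items():
    print(group, row)
```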

Conclusion

AI is transforming the hiring process, but addressing bias remains a critical challenge. Through data analysis and thorough testing, AI can help identify and address fairness concerns. Earlier sections outlined how analyzing data patterns and testing algorithms play a key role in making these improvements. To effectively detect and minimize bias, organizations should focus on:

- High-quality data: Training AI with diverse and representative datasets.

- Transparency: Making AI decision-making processes clear and open to review.

- Ongoing monitoring: Ensuring the system continues to perform as intended over time.

These practices form the foundation for improving AI-driven hiring systems.

Looking ahead, AI in hiring is evolving with some promising advancements, including:

- Real-time bias detection: Systems that flag potential issues before they affect decisions.

- Fairness metrics: Standardized tools to measure and compare bias levels across systems.

- Adaptive learning: AI that adjusts to shifts in workforce demographics automatically.

The key to success lies in blending automation with human oversight and continuously refining AI to meet changing diversity goals. The ultimate objective is to create hiring processes that are fair and inclusive. Tools like JobSwift.AI are already making strides by streamlining applications while encouraging transparency and equity in hiring.